AI Test Agents: Mastering Voice AI Testing

James Zammit

Co-founder & CEO @ Roark

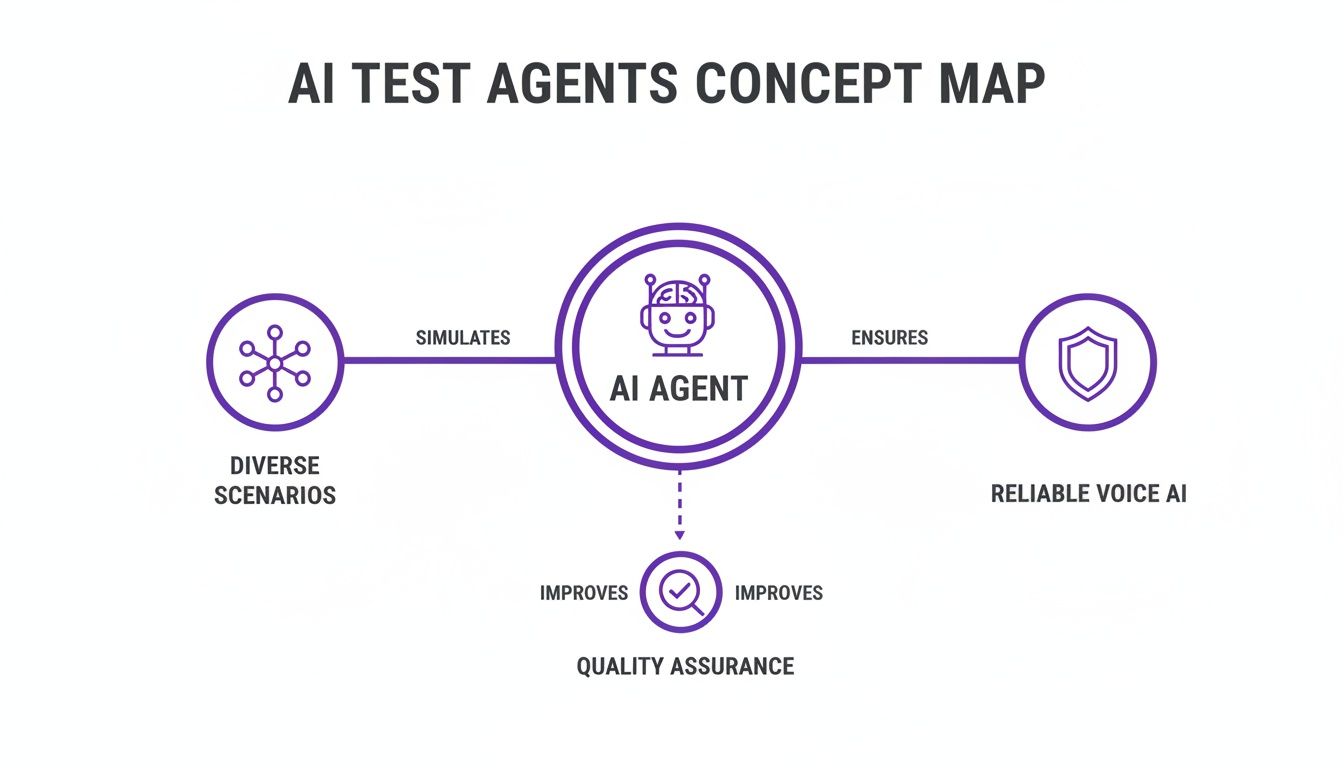

Think of it this way: what if you could hire an entire team of expert mystery shoppers to call your Voice AI system around the clock, testing every possible thing a customer might say or do? That's exactly what an AI test agent does.

These aren't just simple scripts. They are specialized AI systems built to autonomously simulate real-world user interactions, making sure your conversational AI is solid, effective, and ready for whatever humans throw at it.

What Are AI Test Agents and Why Do They Matter?

When we think about traditional software QA, it's usually about predictable, scripted tests. Click a button, get an expected result. Simple. But real human conversation is the complete opposite of predictable, and this is where old-school manual testing just falls apart for Voice AI.

A manual QA team simply can't keep up with the sheer scale and chaos of real-world user calls. People get tired, their testing is naturally limited, and trying to organize thousands of simultaneous test calls is a logistical and financial nightmare. This approach always leaves huge blind spots where bugs and frustrating user experiences love to hide.

The Shift From Manual Scripts to Autonomous Simulation

AI test agents represent a complete rethinking of how we do quality assurance for conversational AI. Instead of mindlessly following a script, these agents are designed to think, act, and react like actual users, creating a dynamic and far more realistic testing environment.

They offer some massive advantages over traditional methods:

- Huge Scalability: A fleet of AI test agents can simulate thousands of concurrent calls, pushing your Voice AI to its breaking point to see how it performs under real pressure.

- Endless Variety: They can generate countless test scenarios on their own, using different accents, adding background noise, interrupting, and asking complex, multi-part questions that a human tester might never think of.

- Rock-Solid Consistency: Unlike human testers, who have good days and bad days, AI agents don't get tired or distracted. You can rerun the same scenario on demand and get reliable, repeatable results.

- Far Deeper Insights: These agents do more than return a pass/fail. They capture dozens of metrics on every call, from latency and sentiment to how well the AI followed instructions, giving you rich, actionable data.

The real power of AI test agents is that they move you past asking, "Does this feature work?" to answering the much more important question: "How does our system actually perform under the messy, chaotic conditions of a real conversation?"

Traditional QA vs AI Test Agents for Voice AI

| Capability | Traditional QA | AI Test Agents |
|---|---|---|
| Scope | Limited to predefined scripts and scenarios. | Can autonomously generate thousands of unique, realistic scenarios. |
| Scalability | Extremely limited; scaling up requires hiring more people. | Massively scalable; can simulate thousands of concurrent calls. |
| Speed | Slow; manual execution and reporting. | Fast; runs tests in parallel and provides near-real-time feedback and analytics. |
| Coverage | Can only cover a fraction of possible user behaviors. | Covers far more edge cases and complex interactions. |
| Cost | High operational costs that grow with scale. | Low marginal cost per test; highly cost-effective at scale. |

Ultimately, while traditional QA has its place, it can't provide the confidence needed to launch a production-grade Voice AI system that customers will actually trust and enjoy using.

The Core Architecture of Modern AI Test Agents

To really get what makes AI test agents so powerful, you have to look under the hood. Their architecture isn't just one piece of software; it's more like a sophisticated, three-part assembly line built for quality assurance in Voice AI. Each stage has a specific, crucial job in turning raw data into real insights, making sure your system is solid and dependable.

Autonomous Test Generation

The bedrock of any serious testing platform is its ability to cook up relevant, tough scenarios all on its own. This is Autonomous Test Generation. Instead of being stuck with a limited library of pre-written scripts, these AI agents intelligently create new tests by learning from what's actually happening out in the wild.

For instance, when a live call drops or fails, a platform like Roark can instantly grab the details of that conversation—the caller's exact words, their accent, the background noise—and turn it into a repeatable, automated test. This creates an incredible feedback loop where every real-world failure makes your pre-deployment defenses stronger. The same mistake won't get you twice.
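The capture step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Roark's implementation: `CallRecord`, `RegressionTest`, and `call_to_test` are hypothetical names, and a real platform would also keep the audio, the timing, and the Voice AI's side of the conversation.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class CallRecord:
    """Hypothetical, simplified record of one production call."""
    call_id: str
    caller_turns: list[str]              # what the caller said, in order
    accent: Optional[str] = None         # e.g. "scottish"; None if unknown
    noise_profile: Optional[str] = None  # e.g. "street"; None if the line was clean
    failed: bool = False
    failure_reason: Optional[str] = None


@dataclass
class RegressionTest:
    """A repeatable test case replayed against the Voice AI before each deploy."""
    name: str
    caller_script: list[str]
    accent: Optional[str]
    noise_profile: Optional[str]
    expectation: str
    source_call_id: str


def call_to_test(call: CallRecord) -> Optional[RegressionTest]:
    """Turn a failed production call into a regression test; successful calls are ignored."""
    if not call.failed:
        return None
    return RegressionTest(
        name=f"regression-{call.call_id}",
        caller_script=list(call.caller_turns),  # copy so later edits to the record don't leak
        accent=call.accent,
        noise_profile=call.noise_profile,
        expectation=f"must not repeat failure: {call.failure_reason or 'unknown'}",
        source_call_id=call.call_id,
    )
```

Keeping the accent and noise profile on the test is what makes the replay realistic: the same words in a quiet room may not reproduce the original failure.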

Intelligent Test Orchestration

So you have thousands of realistic test cases. Now what? How do you run them in a way that actually mimics real-world chaos? That's the job of Intelligent Test Orchestration. It's the air traffic controller of the system, managing and running countless tests at the same time to simulate peak call volumes and high-stress situations.

Orchestration is way more than just running tests one by one. It's about:

- Load Simulation: Smartly scheduling and executing thousands of simultaneous test calls to see how the system holds up under extreme pressure.

- Persona Management: Assigning specific personas to different tests, each with unique accents, speaking speeds, and even moods.

- Resource Allocation: Efficiently managing computing power to run massive test suites without any slowdowns or bottlenecks.

Intelligent orchestration ensures that you're not just testing what your AI can do, but testing how well it does it when everything is on the line.
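Here is a minimal sketch of the load-simulation and persona-assignment ideas using Python's `asyncio`. The `simulate_call` coroutine is a hypothetical stand-in for placing a real test call; the semaphore caps how many calls are in flight at once, and personas are assigned round-robin.

```python
from __future__ import annotations

import asyncio
import itertools
import random


async def simulate_call(call_id: int, persona: str, rng: random.Random) -> dict:
    """Hypothetical stand-in for one simulated call; a real agent would dial the Voice AI here."""
    await asyncio.sleep(rng.uniform(0.0, 0.01))  # pretend the conversation takes a moment
    return {"call_id": call_id, "persona": persona, "ok": True}


async def run_load_test(
    num_calls: int, max_concurrent: int, personas: list[str], seed: int = 0
) -> tuple[list[dict], int]:
    """Run num_calls simulated calls, at most max_concurrent at a time.

    Returns the per-call results (in call order) and the peak concurrency observed.
    """
    rng = random.Random(seed)
    limit = asyncio.Semaphore(max_concurrent)
    persona_cycle = itertools.cycle(personas)  # round-robin persona assignment
    in_flight = 0
    peak = 0

    async def guarded(call_id: int, persona: str) -> dict:
        nonlocal in_flight, peak
        async with limit:  # waits while max_concurrent calls are already running
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                return await simulate_call(call_id, persona, rng)
            finally:
                in_flight -= 1

    coros = [guarded(i, next(persona_cycle)) for i in range(num_calls)]
    results = await asyncio.gather(*coros)  # gather returns results in input order
    return list(results), peak
```

In a real orchestrator, `max_concurrent` would come from the load profile you want to reproduce, and each persona would carry accent, pace, and mood settings rather than a plain string.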

Automated Evaluation and Insights

The final pillar is Automated Evaluation. After the tests are generated and orchestrated, the system has to automatically figure out what passed, what failed, and—most importantly—why. This is where AI test agents truly leave traditional methods in the dust, going way beyond simple pass/fail grades to deliver deep, nuanced analysis.

Using sophisticated models, these agents score conversations against dozens of critical metrics. A platform like Roark can capture over 40 built-in metrics on every single call. This includes measuring:

- Performance: How quickly the system responds, such as the latency before each reply and the overall response time.

- Accuracy: Did it understand the user's intent? Did it follow instructions?

- Quality: Gauging intangibles like sentiment, emotion, and repetition.
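As one example of a performance metric, here is a minimal sketch of turn-level latency, measured as the gap between the caller finishing a sentence and the Voice AI starting its reply, summarized with nearest-rank percentiles. The `Turn` structure and field names are assumptions for illustration, not Roark's metric definitions.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Turn:
    user_end_s: float     # when the caller stopped speaking (seconds from call start)
    agent_start_s: float  # when the Voice AI began its reply


def turn_latencies_ms(turns: list[Turn]) -> list[float]:
    """Response gap for each turn, in milliseconds."""
    return [(t.agent_start_s - t.user_end_s) * 1000.0 for t in turns]


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, with pct between 0 and 100."""
    if not values:
        raise ValueError("percentile of an empty list")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100.0 * len(ordered)))
    return ordered[rank - 1]


def latency_summary(turns: list[Turn]) -> dict[str, float]:
    """Median and tail latency for one call."""
    latencies = turn_latencies_ms(turns)
    return {"p50_ms": percentile(latencies, 50), "p95_ms": percentile(latencies, 95)}
```

Tracking the p95 alongside the median matters because a few very slow turns can ruin a call even when the typical turn is fast.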

Essential Capabilities for Realistic Voice AI Testing

The real measure of an AI test agent is how well it can copy the messy, unpredictable reality of human conversation. If your system only passes clean, scripted tests, it's going to fall apart the moment it meets a real customer.

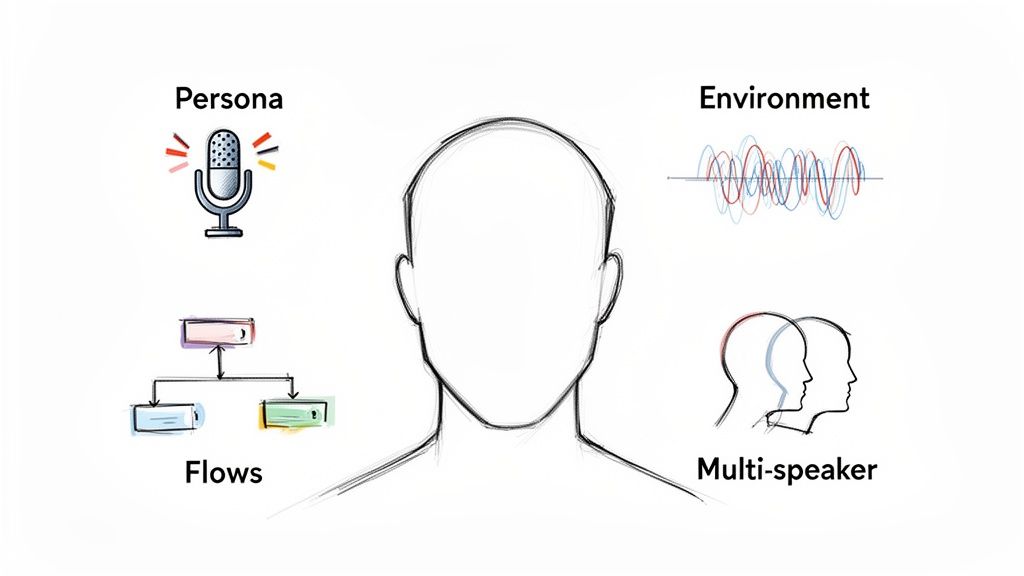

Advanced Persona Simulation

The most crucial capability is Persona Simulation. This isn't just about reading lines from a script; it's about embodying the full range of human callers. A top-tier AI test agent has to realistically mimic specific user archetypes to make sure your Voice AI works for everyone.

- Language and Accents: Testing with a standard American English accent just won't cut it. The agent needs to handle everything from a thick Scottish brogue to a rapid-fire Spanish speaker to find comprehension gaps.

- Vocal Characteristics: Simulating different pitches, speaking speeds, and even emotions like frustration or excitement is absolutely key.

- User Behaviors: The agent should be able to model different personalities, like the impatient caller who constantly interrupts or the hesitant one who needs a little extra time to respond.
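One simple way to get systematic persona coverage is to enumerate combinations of traits. The sketch below uses `itertools.product`; the trait values are hypothetical placeholders, and each `Persona` would still need to be mapped to a voice, an accent, and a behavior policy by whatever simulation platform you use.

```python
from __future__ import annotations

import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    accent: str
    speaking_rate: float  # 1.0 = typical pace; above 1.0 is faster
    mood: str
    behavior: str         # e.g. "interrupts" or "hesitant"


# Hypothetical trait lists; replace with the populations your callers actually come from.
ACCENTS = ["us_general", "scottish", "spanish_l1"]
RATES = [0.8, 1.0, 1.3]
MOODS = ["calm", "frustrated"]
BEHAVIORS = ["interrupts", "hesitant"]


def persona_matrix() -> list[Persona]:
    """Every combination of the trait lists above."""
    return [
        Persona(accent, rate, mood, behavior)
        for accent, rate, mood, behavior in itertools.product(ACCENTS, RATES, MOODS, BEHAVIORS)
    ]
```

The full cross product grows multiplicatively (here 3 × 3 × 2 × 2 = 36 personas), so larger trait lists are often sampled, for example with pairwise (all-pairs) selection, rather than run exhaustively.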

Realistic Environmental Simulation

Conversations rarely happen in a soundproof booth. Environmental Simulation is the critical ability to inject the chaos of real-world audio into your tests. This is how you find out if your Voice AI can actually isolate a speaker's voice from background noise.

A truly robust Voice AI is one that can understand a customer's request over the sound of a barking dog, a blaring car horn, or the chatter of a crowded coffee shop. This is where environmental simulation proves its worth.
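A common way to inject background noise is to mix a noise clip into clean speech at a chosen signal-to-noise ratio (SNR). The sketch below assumes mono audio as lists of float samples at the same sample rate; in practice you would use NumPy and real recordings (the dog, the horn, the coffee shop), and clip or normalize the result to avoid overflow.

```python
from __future__ import annotations

import math


def mean_power(samples: list[float]) -> float:
    """Average of squared sample values."""
    return sum(s * s for s in samples) / len(samples)


def mix_at_snr(speech: list[float], noise: list[float], snr_db: float) -> list[float]:
    """Add noise to speech, scaled so the added noise sits snr_db below the speech power."""
    if not speech or not noise:
        raise ValueError("speech and noise must be non-empty")
    if len(noise) < len(speech):
        # Loop the noise clip so it covers the whole utterance.
        noise = noise * math.ceil(len(speech) / len(noise))
    noise = noise[: len(speech)]
    p_speech = mean_power(speech)
    p_noise = mean_power(noise)
    if p_noise == 0:
        return list(speech)  # silent "noise": nothing to add
    # Solve 10 * log10(p_speech / (gain**2 * p_noise)) == snr_db for gain.
    gain = math.sqrt(p_speech / (p_noise * 10 ** (snr_db / 10)))
    return [s + gain * n for s, n in zip(speech, noise)]
```

Lower SNR means louder noise relative to the speech, so sweeping the same test across, say, 20, 10, and 5 dB shows where recognition starts to break down.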

Navigating Dynamic Conversation Flows

Real conversations don't follow a straight line. They branch, backtrack, and get interrupted. A good AI test agent needs to navigate Dynamic Conversation Flows, moving well beyond rigid, step-by-step scripts.

For instance, if the Voice AI asks for an account number, a real person might say, "Hang on, I need to find my wallet," or ask, "Do you mean my member ID?" The test agent must be able to react to these detours and carry on the conversation logically, just like a human would.
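Real test agents typically use a language model to improvise, but the basic behavior can be illustrated with a small rule-based caller. In this hypothetical `DetourCaller`, the first request for a piece of information triggers a detour (like "Hang on, I need to find my wallet"), and the value is only given when the Voice AI asks again, so the test checks that your system waits and re-prompts instead of failing.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DetourCaller:
    """Scripted caller that answers slot questions but detours the first time each slot is requested."""
    slots: dict[str, str]                                  # e.g. {"account number": "4417 2209"}
    detours: dict[str, str] = field(default_factory=dict)  # what to say on the first request
    _detoured: set[str] = field(default_factory=set, init=False, repr=False)

    def reply(self, bot_utterance: str) -> str:
        text = bot_utterance.lower()
        for slot, value in self.slots.items():
            if slot in text:
                # Detour once per slot, then cooperate when the bot asks again.
                if slot in self.detours and slot not in self._detoured:
                    self._detoured.add(slot)
                    return self.detours[slot]
                return value
        return "Sorry, can you say that again?"
```

A test built on this would assert that, after the detour, the Voice AI re-asks for the account number rather than moving on or ending the call.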

Capabilities like these are in high demand: the broader market for AI agents is projected to reach USD 182.97 billion by 2033. Platforms like Roark are designed to meet this need, capturing more than 40 built-in metrics on every call, including latency and sentiment.

Key Metrics and Common Pitfalls to Avoid

So you've got AI test agents running. That's a huge step. But here's the thing: their real power isn't just in running tests, it's in measuring what actually matters to your customers.

Essential Voice AI Quality Metrics

| Metric Category | Example Metric | Why It Matters for User Experience |
|---|---|---|
| Performance | Latency | Long, awkward pauses kill the conversational flow and make the AI feel slow and frustrating. |
| Performance | Response Time | This is the total "turnaround time" in a conversation. A slow response time makes the interaction feel clunky. |
| Accuracy | Intent Recognition | Did the AI get what the user wanted? Misunderstanding the goal is a guaranteed path to a bad experience. |
| Accuracy | Instruction Following | If a user asks for three things, the AI needs to do all three. Partial success is still a failure. |
| Conversational | Sentiment and Emotion | A sudden drop in user sentiment is a huge red flag that something went wrong in the conversation. |
| Conversational | Repetition Rate | If the user or AI keeps repeating themselves, it's a clear sign of a breakdown in understanding. |
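Repetition rate can be approximated by checking whether a turn nearly duplicates an earlier one. This sketch uses `difflib.SequenceMatcher` from the Python standard library; the 0.9 similarity threshold is an arbitrary assumption, and you would run it separately on the caller's turns and on the AI's turns.

```python
from __future__ import annotations

from difflib import SequenceMatcher


def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences don't hide a repeat."""
    return " ".join(text.lower().split())


def repetition_rate(turns: list[str], threshold: float = 0.9) -> float:
    """Fraction of turns after the first that nearly duplicate an earlier turn.

    Pass one speaker's turns at a time (caller or AI).
    """
    if len(turns) < 2:
        return 0.0
    seen: list[str] = []
    repeats = 0
    for turn in turns:
        norm = _normalize(turn)
        if any(SequenceMatcher(None, norm, prev).ratio() >= threshold for prev in seen):
            repeats += 1
        seen.append(norm)
    return repeats / (len(turns) - 1)
```

A caller who says "I need to reset my password" twice in a row is a strong hint that the first attempt was not understood.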

Common Pitfalls and How to Avoid Them

Pitfall 1: Testing in a Sterile Environment

Teams test their AI in a perfectly quiet room with a crystal-clear, standard accent. It works flawlessly, and everyone celebrates. But real-world calls are never that clean.

Solution: Use environmental simulation to pump realistic background noise into your tests. Pair this with persona simulation to cover a wide range of accents and speaking styles.

Pitfall 2: Focusing Only on Accuracy

It's tempting to obsess over one number, like intent recognition accuracy. But a user can have a miserable time even if the AI is technically correct 100% of the time.

Solution: Balance the scorecard. Always pair your accuracy metrics with conversational quality metrics like sentiment analysis and latency.
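A balanced scorecard can be as simple as requiring every dimension to clear its own bar. In this sketch the metric names and thresholds are hypothetical examples to tune for your product; the point is that a call with perfect intent accuracy still fails if it is slow or leaves the caller upset.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CallScores:
    intent_accuracy: float  # 0..1
    p95_latency_ms: float
    sentiment_delta: float  # end-of-call sentiment minus start, in -1..1


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical defaults; set these from your own product requirements.
    min_intent_accuracy: float = 0.9
    max_p95_latency_ms: float = 1500.0
    min_sentiment_delta: float = -0.2


def evaluate(scores: CallScores, t: Thresholds = Thresholds()) -> list[str]:
    """Return the names of failed checks; an empty list means the call passes."""
    failures = []
    if scores.intent_accuracy < t.min_intent_accuracy:
        failures.append("intent_accuracy")
    if scores.p95_latency_ms > t.max_p95_latency_ms:
        failures.append("latency")
    if scores.sentiment_delta < t.min_sentiment_delta:
        failures.append("sentiment")
    return failures
```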

Pitfall 3: Ignoring the "Why" Behind Failures

When a test fails, the knee-jerk reaction is to just log the bug and move on. This is a massive missed opportunity.

Solution: Dig deeper with a platform that gives you actionable insights. A tool like Roark can tell you why it failed—maybe high latency kicked in when the system tried to understand a specific accent layered with background noise.
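One way to get at the "why" is to slice test results by the conditions each test ran under and rank the slices by failure rate. The result fields used here (`accent`, `noise`, `passed`) are assumptions about how your test results are stored.

```python
from __future__ import annotations

from collections import defaultdict


def failure_rates_by_segment(
    results: list[dict], keys: tuple[str, ...] = ("accent", "noise")
) -> list[tuple[tuple, float, int]]:
    """Group results by the given attributes; return (segment, failure_rate, runs), worst first."""
    totals: dict[tuple, int] = defaultdict(int)
    failures: dict[tuple, int] = defaultdict(int)
    for r in results:
        segment = tuple(r[k] for k in keys)
        totals[segment] += 1
        if not r["passed"]:
            failures[segment] += 1
    ranked = [(seg, failures[seg] / totals[seg], totals[seg]) for seg in totals]
    ranked.sort(key=lambda item: item[1], reverse=True)
    return ranked
```

The run count is returned alongside the rate because a segment with a high failure rate but only a handful of runs may be noise rather than a real weakness.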

Getting this right has a massive payoff. The market for AI agents is growing at a 46.3% CAGR, driven largely by productivity gains: businesses report a median 40% cost reduction and 23% faster workflows from automating complex tasks.

How to Implement and Integrate AI Test Agents

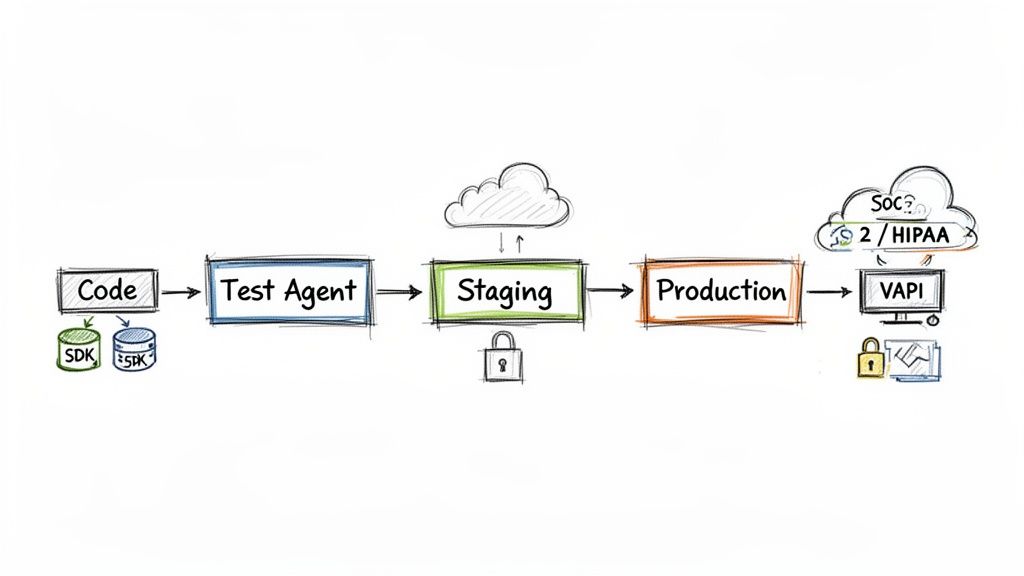

Getting AI test agents up and running is more than just a way to run more tests—it's about rethinking your entire approach to quality. Instead of a linear, reactive process, you build a continuous feedback loop that finally closes the gap between development and production.

Fortify Your Defenses with Pre-Deployment Testing

The smartest way to stop regressions in their tracks is to wire AI test agents directly into your CI/CD pipeline. This is Pre-Deployment Testing. Think of it as an automated quality bouncer that every single code change has to get past before it can be merged or shipped.

- Catch Regressions Early: Find and squash bugs at the cheapest possible stage—before they ever sniff production.

- Increase Developer Confidence: Teams can ship features faster, knowing there's a solid safety net ready to catch any unexpected side effects.

- Automate Quality Gates: You can enforce consistent quality standards on autopilot, taking the pressure off your manual QA team.
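A quality gate usually boils down to a script that reads the test results and returns a nonzero exit code when the build should be blocked, since CI systems generally treat a nonzero exit as a failed step. This is a minimal sketch: the result format, the `critical` tag, and the 95% pass-rate bar are all assumptions.

```python
from __future__ import annotations


def quality_gate(
    results: list[dict], min_pass_rate: float = 0.95, critical_tag: str = "critical"
) -> tuple[bool, str]:
    """Allow a build only if every critical test passed and the overall pass rate meets the bar."""
    if not results:
        return False, "no test results: refusing to pass an empty run"
    failed_critical = [
        r["name"] for r in results if critical_tag in r.get("tags", ()) and not r["passed"]
    ]
    if failed_critical:
        return False, f"critical tests failed: {', '.join(failed_critical)}"
    pass_rate = sum(r["passed"] for r in results) / len(results)
    if pass_rate < min_pass_rate:
        return False, f"pass rate {pass_rate:.1%} is below {min_pass_rate:.0%}"
    return True, f"pass rate {pass_rate:.1%}"


def main(results: list[dict]) -> int:
    """Print the verdict and return a process exit code (0 = ship, 1 = block)."""
    ok, message = quality_gate(results)
    print(message)
    return 0 if ok else 1
```

In a pipeline step you would load the results from your test run and call `sys.exit(main(results))`, so a failing gate stops the merge or deploy.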

Learn from Reality with Post-Deployment Observability

Even with the world's best pre-deployment testing, some things only break in the wild. That's where Post-Deployment Observability comes in. This strategy uses AI test agents to listen in on live calls, analyze interactions, and—most crucially—learn from what goes wrong.

But here's the real magic: the platform automatically turns that real-world failure into a brand-new, repeatable automated test case. That new test then gets added to your pre-deployment suite.
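When many live calls fail in the same way, you want one regression test, not hundreds. A minimal sketch of that bookkeeping, assuming a scenario is identified by its normalized caller script plus accent and noise profile; `fingerprint` and `add_to_suite` are hypothetical helpers, not a platform API.

```python
from __future__ import annotations

import hashlib
import json


def fingerprint(caller_script: list[str], accent: str | None, noise: str | None) -> str:
    """Stable ID for a scenario so the same production failure isn't added twice."""
    payload = json.dumps(
        {"script": [s.strip().lower() for s in caller_script], "accent": accent, "noise": noise},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:16]


def add_to_suite(
    suite: dict[str, dict],
    caller_script: list[str],
    accent: str | None,
    noise: str | None,
    source_call_id: str,
) -> bool:
    """Add a production failure to the pre-deployment suite; return False if it was already there."""
    key = fingerprint(caller_script, accent, noise)
    if key in suite:
        suite[key]["occurrences"] += 1  # count repeats to help prioritize fixes
        return False
    suite[key] = {
        "caller_script": caller_script,
        "accent": accent,
        "noise": noise,
        "source_call_id": source_call_id,
        "occurrences": 1,
    }
    return True
```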

Streamlining Integration and Ensuring Compliance

Getting started with AI test agents shouldn't feel like a six-month science project. Modern platforms are built for speed. Roark, for example, offers one-click integrations for popular Voice AI frameworks like VAPI and Retell, along with simple Node and Python SDKs that can have you up and running in minutes.

The latest data shows that 86% of enterprises are already using, testing, or planning to implement AI agents. They're seeing real results, too, with a median 40% reduction in cost-per-unit and a 23% faster time-to-market.

Of course, for teams in regulated spaces like healthcare or finance, security and compliance are table stakes. It's vital to pick a platform that provides options for SOC 2 and HIPAA compliance. This ensures you can tap into the power of AI testing without putting sensitive user data at risk.

Your Next Steps in Adopting AI Test Agents

So, where do you go from here? The conversation around AI test agents isn't about if you should adopt them anymore—it's about how to do it right. If you want to build a Voice AI that customers actually trust, this is the clearest path forward.

This isn't about just catching a few more bugs. It's about fundamentally rewiring your approach to quality. The real goal here is to create a powerful feedback loop where every single failure in production automatically makes your pre-deployment testing smarter.

Your Adoption Checklist

1. Audit Your Current Testing Gaps: First things first, figure out where your current QA process is leaking. Are you constantly getting tripped up by diverse accents? Is background noise wrecking your recognition rates? Get specific about the blind spots that AI agents are perfectly built to see.

2. Find Your Most Common Failures: Dig into your production logs and support tickets. What are the top three reasons your Voice AI is failing out in the wild? These repeat offenders are the perfect first targets for an AI agent to generate automated tests against.

3. Pilot an Integrated Platform: Please, don't try to build all this from scratch. Pick a unified testing and observability platform like Roark that's designed to connect pre-deployment testing with production monitoring.
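For step 2, if your production logs can be exported as records with a failure label, a few lines of Python will surface the repeat offenders. The `failed` and `failure_reason` fields are hypothetical; adapt them to whatever your logging or observability tool records.

```python
from __future__ import annotations

from collections import Counter


def top_failure_reasons(call_logs: list[dict], n: int = 3) -> list[tuple[str, int]]:
    """Count failure reasons across failed calls and return the n most common."""
    reasons = Counter(
        log.get("failure_reason") or "unlabeled"  # keep unlabeled failures visible
        for log in call_logs
        if log.get("failed")
    )
    return reasons.most_common(n)
```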

The big idea is simple: a single platform connecting pre-deployment tests to production observability is the secret to building truly resilient Voice AI. It's about having one cohesive quality strategy, not two separate teams working in different worlds.

A Few Common Questions About AI Test Agents

Just How Technical Do I Need to Be to Use These?

This is a great question, and the answer is probably less than you think. While an engineer is usually the one to handle the initial setup—which often just means dropping in a few lines of code with an SDK or doing a one-click integration—the day-to-day use is a different story.

Once it's running, QA teams and even non-technical product managers can jump right in. They can build out test cases, dig into the analytics dashboards, and make sense of failure reports without ever touching the code.

Can AI Agents Really Handle How Unpredictable People Talk?

Absolutely. In fact, that's their superpower. Old-school, rigid scripts just can't simulate real conversations. AI test agents, on the other hand, are built on advanced language models that get context, handle interruptions, and navigate all the weird detours people take in a conversation.

The real magic of an AI test agent isn't just following a script. It's the ability to have a dynamic, responsive conversation that truly stress-tests your system's conversational smarts, not just its ability to follow a flowchart.

How Do These Agents Scale Up for Big Load Tests?

This is where they leave manual testing in the dust. AI test agents are cloud-native systems designed to simulate huge, concurrent call volumes without breaking a sweat. You can spin up a test that mimics thousands of simultaneous users calling your system, each with their own unique persona and even different background noise profiles.

This lets you accurately model those peak traffic moments—think a Black Friday sale or a sudden service outage—and see if your infrastructure can handle the pressure without performance tanking.
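To model a peak like a Black Friday rush, a load test is often driven by a traffic profile rather than a single fixed number. This hypothetical helper produces a per-step target for concurrent calls that ramps up linearly, holds at the peak, and ramps back down; each value could be handed to an orchestrator as its concurrency limit for that step.

```python
from __future__ import annotations


def ramp_profile(baseline: int, peak: int, ramp_steps: int, hold_steps: int) -> list[int]:
    """Concurrent-call targets per step: ramp from baseline to peak, hold, then ramp back down."""
    if ramp_steps < 1:
        raise ValueError("ramp_steps must be at least 1")
    up = [round(baseline + (peak - baseline) * i / ramp_steps) for i in range(ramp_steps + 1)]
    hold = [peak] * hold_steps
    down = list(reversed(up[:-1]))  # mirror the ramp, without repeating the peak step
    return up + hold + down
```

Watching latency and failure rates at each step shows not just whether the system survives the peak, but where performance starts to degrade on the way up.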

Ready to see how a unified QA and observability platform can change the game for your Voice AI development? See how Roark helps you monitor live calls, run powerful simulations, and turn every failure into an automated test. Get started at roark.ai.